Objects in the AI Mirror Are Closer Than They Appear

There are many things artificial intelligence can't do, but it's evolving thousands of times faster than humans.

“The greatest shortcoming of the human race is man’s inability to understand the exponential function.” — Albert A. Bartlett

ChatGPT started a revolution. Or, it marked a dramatic turning point in one already underway. Opinions vary. Either way, in late 2022, when OpenAI released ChatGPT, its first publicly accessible chatbot built on a large language model (GPT-3.5), it blew people away, performing feats of artificial intelligence years or even decades beyond what experts thought possible at the time. It could answer nearly any question, mimic almost any style, and generate astonishingly usable essays, songs, poems, letters, and recipes within seconds. It conversed in such an eerily human way that in early 2024, ChatGPT passed the famous Turing test, once thought to determine whether a machine is capable of human-like thought. And every subsequent iteration of the program has become more capable. Within five days of its original public launch, ChatGPT had one million users. Within two months, 30 million. Today, just over two years later, it has 300 million users, and a torrent of competitors has flooded the Internet. Revolution, however, is inherently destabilizing, and the rise of AI has accelerated and amplified age-old anxieties about technological unemployment and machines replacing humanity.

It’s easy for those concerned to end up sounding like ignorant troglodytes futilely trying to hold back the tide of the future or fearmongering lunatics raving about Skynet or techno-dystopias. But it’s equally easy to slide into a kind of obstinate denialism about the ways in which AI seems poised to upend many facets of life, with consequences that won’t always be beneficial and benefits that won’t be evenly shared. Talk to the proverbial man on the street, and he finds AI appropriately troubling. Highly educated people in positions of influence, however, tend to play both sides. For all the science fiction disaster scenarios and AI fear clickbait the mass media pumps out to drive revenue, these same people, as individuals, tend to hold far more sanguine attitudes, often expressed by pointing out the many things AI can’t do. But when you look at the trend lines of how far AI has come and the trajectory of where it’s headed, you don’t have to be an alarmist to start getting seriously worried.

From Mythology to Technology

The concept of thinking machines dates back to antiquity, with tales of Hephaestus, the ancient Greek god of craftsmanship, creating mechanical servants and a bronze man, what we’d call a robot, named Talos. Similar ideas popped up throughout history, with medieval legends like the Brazen Head, or designs such as Ismail al-Jazari’s automatons and Leonardo da Vinci’s mechanical knight. With the advent of electronic computing in the mid-20th century, “artificial intelligence” became an actual field of study. And with the rise of science fiction, AI became a fascinating fulcrum of doomsday scenarios or dystopian futures. But it seemed a distant thing, so far off that it hadn’t made the jump from imagination to perturbation in the popular mind.

That changed in 2014 with the publication of philosopher Nick Bostrom’s book Superintelligence: Paths, Dangers, Strategies, which jump-started a new wave of discourse about AI. Led by scholars like Bostrom, scientists such as Stuart Russell, Max Tegmark, Eliezer Yudkowsky, and Stephen Hawking, tech entrepreneurs like Elon Musk, and podcasters like Sam Harris and Lex Fridman, a serious discussion of the existential risks of artificial intelligence took shape. Even though AI of the sort we now have was believed to be many years away in the mid-2010s, the fact that so many sober-minded people1 treated the subject with such gravity looks prescient in hindsight, even if their specific focus seemed rather overdramatic. As the 2010s wound down, concerns over AI faded into niche obscurity. Then ChatGPT came along and breathed new life into them, replacing the discourse about alignment problems, intelligence explosions, and implacably genocidal paperclip maximizers with comparatively more prosaic and material concerns about human obsolescence.

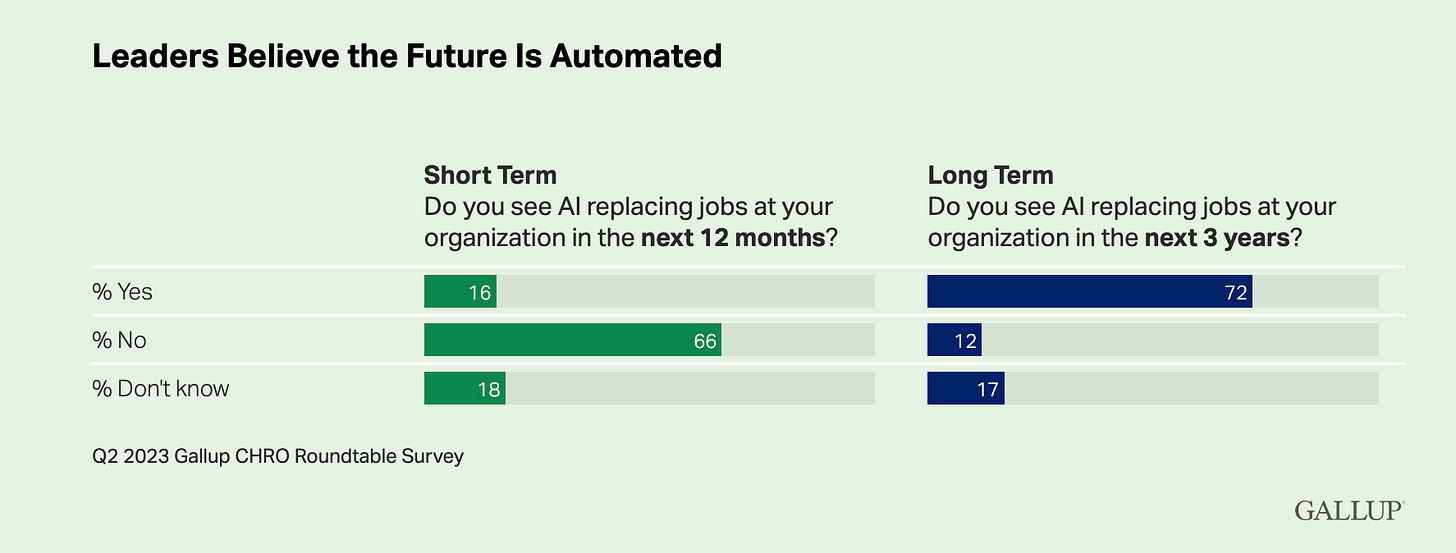

The data on this score is worrying. A study conducted by OpenAI itself, in conjunction with the University of Pennsylvania, found that large language model AIs could affect at least 10 percent of the work tasks of roughly 80 percent of the US workforce. We’re already starting to see it. A National Bureau of Economic Research survey found that 28 percent of working-age American adults have used generative AIs for their jobs. Researchers studying online labor markets detected a 24 percent post-ChatGPT decline in job postings and participating clients for text- and programming-related work, as well as intensified competition among freelancers in these fields. By February of 2023, 25 percent of surveyed companies had already replaced some employees with ChatGPT. A 2023 Gallup poll found that 72 percent of chief human resource officers see their company replacing jobs with AI over the next three years. By mid-2024, 61 percent of American corporations were planning to use AI to replace human work.

And this is only the beginning. As I covered in a previous article, a 2023 McKinsey report estimates that between 60 and 70 percent of the work done by humans today can potentially be automated with the AI we have right now. This says nothing of the havoc AI is wreaking on education, where half of K-12 and college students now use AI for coursework on a regular basis (read: doing their homework, writing their papers, and helping them cheat). AIs are getting smarter as humans are getting dumber. What could go wrong?

A common mantra one hears is “AI won’t replace people — but people with AI will replace people without AI.” What’s left unsaid is that a handful of humans with AI can do the work of a small army of humans without it. If a workforce of 500 is reduced to seven people with AIs, that is a workforce of 493 being replaced with AI. I worked in the insurance business for 13 years and saw this process firsthand even before the AI revolution. When I started in 2010, the agency had seven full-time and two part-time employees working a total of 304 hours per week. By 2019, thanks to a variety of automated technologies, the agency had four full-time and three part-time employees working a total of 190 hours per week — with the same productivity.2 And this was just from simple innovations like automated payment systems, automated toll-free phone numbers, and self-quoting insurance software. Generative AIs are to robo phone lines what a spaceship is to a paper airplane.

Humanity of the Gaps

As AI insinuates itself into ever more areas of work, it becomes indispensable. In my own work as a professional writer and editor, I have come to depend on AI for a variety of tasks. AI has increasingly become my graphic designer, research assistant, part-time proofreader, and an extra set of eyes to give advice when a sentence seems grammatically correct but feels off somehow. I’ve never used AI to write anything for me. As an editor, however, I’ve caught writers using it. AI writing is, at present, actually quite easy to spot. The writing isn’t awful; in fact, it’s better than what most people can produce. But it’s not quite up to the standard of most professional writers, and the longer the composition, the more glaring its tells become: flowery language, wordiness, and repetitive sentence structure. Even if you specifically ask AI to mimic the style of a particular writer, it produces prose reminiscent of a student padding a school paper with vacuous fluff to reach the required length.

This is where many people in my position confidently proclaim that because AI cannot replace them right now, it never will. There is a plethora of other tasks AI also can’t do, or can’t do well, or can’t do as well as human experts or specialists. For many people skeptical of AI’s potential to ravage once-human endeavors, this fact provides an easy out. “Look at all these things it can’t do and just calm down!”

In scores of different domains, we see this narrative repeated endlessly. AI cannot write, direct, or perform as well as Hollywood pros, so it won’t replace screenwriters, directors, or actors. AI won’t make art or write novels or replace healthcare workers or teachers. AI is not authentically human enough, so it won’t replace bosses or organizational leaders. AI won’t replace you. Over and over, we are told that AI “can’t think” and is “stupid,” “dumb,” and “dumber than you think.” You know who else is dumber than you think? People. And, unlike their silicon counterparts, people aren’t progressing by leaps and bounds every year. We’re the same models that rolled out a few hundred thousand years ago. Meanwhile, the AIs of 2024 are an entirely different and altogether more advanced species than the AIs of 2021. Extrapolate this into the future and the likely reality of our situation becomes clear.

The assumption that technology will never systematically match or exceed human ability in most areas, simply because it hasn’t done so yet, is a dangerous and potentially foolish line of thinking. Given how far AI has advanced in recent years, every declaration of what AI “can’t do” must be affixed with a “...yet.” Taking refuge in AI’s current shortcomings and pretending that it will never improve echoes the fallacious “God of the gaps” reasoning, which attributes whatever science has not yet explained to the supernatural, even as science keeps closing those gaps.

Does anyone, when they take a moment to really think about it, honestly expect AI to plateau or regress in the coming years? Of course not. As long as humanity avoids bombing itself back to the Stone Age, we have every reason to expect growth. Even in the absence of any big breakthroughs, the simple progression of existing dynamics will lead to incredible improvements in AI. Algorithms will only get more sophisticated. Computing power will only increase. Engineers and programmers, increasingly aided by the very AIs they create, will only continue to iterate and build upon existing systems. And the relative cost of the associated technologies will only continue to come down. AI will have access to ever more data and ever greater reasoning, flexibility, versatility, language skills, common sense — and yes, creativity. Whether AI will ever become truly conscious — or whether it already is — is beyond my ability to discern and beyond the scope of this essay. But AI need not be conscious to replace humans. As we noted above, it’s already happening.

Five years ago, I couldn’t ask an AI to write an article for me. Today, I can. And while it cannot write as well as a professional writer, and has a distinctive style recognizable to a trained eye, it still writes better than the overwhelming majority of people. In another five years, or 10, or 15, who’s to say it won’t write just as well as anyone? Who’s to say it won’t bridge dozens of other gaps in creativity, originality, human authenticity, and so forth? If I were a betting man, I wouldn’t bet against machines mastering, sooner than we expect, nearly every skill that can be performed by someone at a desk or in an office environment. Some other human tasks, however, may be considerably more durable.

The Knowledge Workers’ Conceit

Contrary to the historical concerns over automation and technological unemployment, many blue-collar or hands-on jobs may end up being the most insulated from the rise of AI. Artificial intelligence has far outpaced robotics in ability and potential, despite the latter having had a generations-long head start. It turns out that building robots to perform simple physical tasks like folding towels, unclogging a drain, or administering a back rub is orders of magnitude more costly and impractical than building AI systems to absorb knowledge work, and far less effective.

Ironically, the managerial, creative, and intellectual parts of the human mind are more easily replicated by AI software than the human body’s most basic motor skills are by robotic hardware. Machines were beating the best human chess players by the late 1990s. By the early 2000s, anyone could purchase unbeatable chess programs for the cost of a PlayStation game. A few years later, they were widely available for free. Meanwhile, in 2024, Boston Dynamics’ latest humanoid “Atlas” robot can stack trays with all the grace and speed of a palsied, rheumatic nonagenarian for the low cost of hundreds of thousands of dollars.3

Many writers and content creators believe that what will protect them is their personality. Creators hope that in cultivating a unique brand, where audiences consume their wares not because of excellence in some objective metric but because they enjoy their style, trust their judgment, or respect their opinions, it won’t matter if they are surpassed by AI in raw ability. Certainly, this is my hope. But I see no reason to suppose that AIs will never become sophisticated enough to inspire loyal audiences or parasocial followings, whether openly as AIs or by posing as human. Indeed, social media is already increasingly populated with virtual influencers such as Lil Miquela, Lu do Magalu, and Aitana Lopez.

So what’s the takeaway here, that the machines are coming and we’re all doomed? Well, not necessarily. But white-collar types are caught between a rock and a hard place. On one side, we see AI making rapid advances in the fields we occupy. On the other, we have our own reputations to uphold, and no one, least of all educated professionals with public personas, wants to be seen as an alarmist, an old fogey, or a crackpot. Many of us, I think, also don’t want to believe that what we do as knowledge workers is actually easier to replicate than the “lowly” tasks performed by the maids at the hotels where we hold our conferences. The bell will eventually toll for the maids, plumbers, and line cooks too, but probably not for a while yet. Again, unless we destroy ourselves, technology will only move in one direction: forward.

Once upon a time, the prevailing conviction among chess grandmasters was that because no machine had ever bested them, no machine ever could. Right up until Deep Blue defeated world champion Garry Kasparov in their 1997 rematch, and reality took off its white velvet glove and backhanded them across the face. That white glove is coming for all of us, sooner or later. The best we can do is prepare and brace for the impact, and then make sure the new normal benefits all of humanity. One option I’ve long advocated for is universal basic income. We could have a future where the fruits of technology become a rising tide that lifts all boats, or a future where runaway social stratification creates a form of techno-feudalism. The writer William Gibson famously said, “The future is already here — it’s just not very evenly distributed.” That will only become more true as time goes on. It’s up to us to change that. And the first step is to open our eyes and recognize that objects in the mirror are closer than they appear.

See also: “When 65 is Young: The Politics of Life Extension”

You can also support the work on Patreon. You can reach me at @AmericnDreaming on Twitter, @jamie-paul.bsky.social on Bluesky, or at AmericanDreaming08@Gmail.com.

2. I tweeted a thread about my experience in 2019 that caught the eye of New York Times tech reporter Kevin Roose, who interviewed me for his book Futureproof: 9 Rules for Humans in the Age of Automation (2021).

3. I’d love to hear the financing options on that infomercial.
